What is ML.NET and why should developers use it?

8 min read - 23 Aug, 2023

I realised that I had left this blog article blank, and it would be a disservice to the AI phenomenon if I didn't complete it. I have been pondering a project that will have longevity. Sure, anyone can create a proof of concept, but as I did with my news aggregator, I wanted to build something that would scale and last. It turns out that the best candidate was my Gallery, a place where I already have a bit of a process going.

Currently I have the following flow on my site:

- Camera uploads to FTP

- A .NET 8 application picks up new images from the FTP site

- The image is processed, including making thumbnails and parsing out the meta information

- This information is uploaded to MongoDB

- Images are then approved in my website Admin Area

What I have done is apply ML.NET to the image processor so that it can classify the images it is processing and add titles for me, because I am a lazy developer.

The first thing I did was follow the getting started tutorial here: ML.NET Tutorial | Get started in 10 minutes | .NET (microsoft.com)

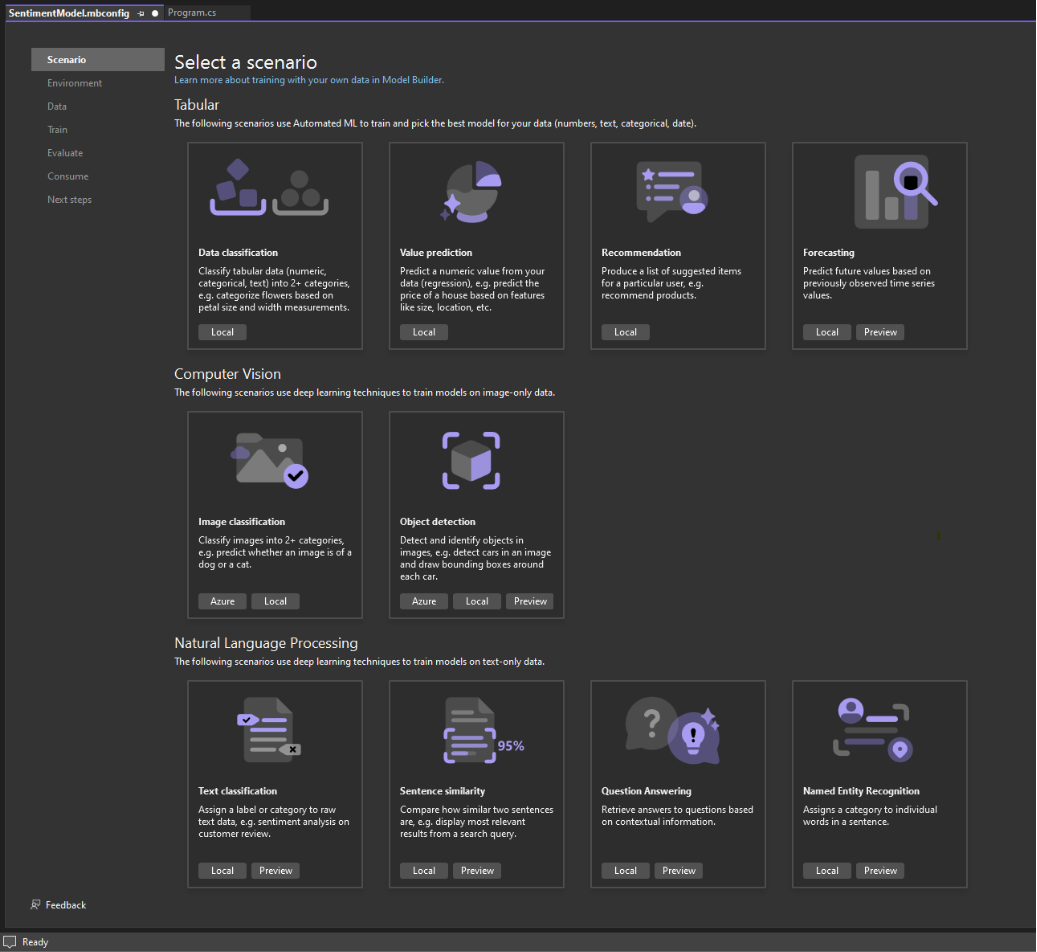

The most interesting part of this process is the "Select a scenario" step.

For this example I am going to go with Computer Vision - Object Detection. I don't want to classify the images into categories; I want to detect all the objects in my photographs so that the title it produces is of decent quality.
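As a rough illustration of the "detect the objects, then build a title" idea, here is a minimal Python sketch. It is not the code from the .NET image processor: the function name `build_title`, the `(label, score)` input shape and the 0.5 confidence threshold are all assumptions made for the example.

```python
from collections import Counter


def build_title(detections, min_score=0.5):
    """Build a simple title from (label, score) pairs.

    Hypothetical helper: the input shape and threshold are illustrative only.
    """
    # Keep only detections at or above the confidence threshold.
    labels = [label for label, score in detections if score >= min_score]
    if not labels:
        return "Untitled"

    # Count repeated labels so that two dogs become "2 x dog".
    counts = Counter(labels)
    parts = []
    for label, n in counts.most_common():
        parts.append(label if n == 1 else f"{n} x {label}")

    title = ", ".join(parts)
    # Upper-case the first character only, leaving the rest untouched.
    return title[0].upper() + title[1:]


if __name__ == "__main__":
    sample = [("dog", 0.9), ("person", 0.8), ("dog", 0.7), ("car", 0.2)]
    print(build_title(sample))
```

The low-confidence "car" is dropped here, which is the main reason to threshold: a title built from every weak detection tends to read like noise.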

Next, I am asked whether I want to host locally or in Azure. Azure can be expensive, so I checked the Azure calculator, and it turns out I get 5,000 transactions free:

5,000 free transactions per month, 20 transactions per minute

That's pretty sweet for this project. However, it turns out that the compute resources aren't free, so we are going to use our local machine.
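To get a feel for what the free tier figures quoted above would cover, here is a small back-of-the-envelope sketch in Python. It assumes one transaction per image, which is my assumption and not something the calculator states; `free_tier_fit` is a made-up helper name.

```python
import math

FREE_PER_MONTH = 5000  # free transactions per month (figure quoted above)
MAX_PER_MINUTE = 20    # rate limit (figure quoted above)


def free_tier_fit(images_per_month, transactions_per_image=1):
    """Return (fits_in_free_quota, minimum_minutes_at_rate_limit).

    transactions_per_image=1 is an assumption for illustration.
    """
    needed = images_per_month * transactions_per_image
    within_quota = needed <= FREE_PER_MONTH
    # Even when the quota is fine, the per-minute cap sets a floor on duration.
    min_minutes = math.ceil(needed / MAX_PER_MINUTE)
    return within_quota, min_minutes


if __name__ == "__main__":
    print(free_tier_fit(1200))
```

This only covers the transaction allowance; as noted, compute is billed separately.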

Next we need to add some data. This is where the plot thickens: we have the option to pre-load our ML.NET application with image labelling, and we can use VoTT or COCO. The VoTT library has been discontinued, so it makes sense to use COCO.

It turns out Bing has an answer for us:

The COCO dataset (Common Objects in Context) is a widely used large-scale labeled image dataset in the field of computer vision. Here’s what you need to know about it:

Purpose and Contents:

- The COCO dataset was created with the goal of advancing image recognition. It contains challenging, high-quality visual data for various computer vision tasks.

- The dataset includes annotations for:

  - Object Detection: Identifying objects within images.

  - Image Segmentation: Segmenting objects into distinct regions.

  - Keypoint Detection: Locating specific points (e.g., joints) on objects.

  - Stuff Segmentation: Identifying materials or objects with no clear boundaries (e.g., sky, grass).

  - Caption Generation: Generating descriptive captions for images.

- It covers a wide range of everyday scenes and objects.

Dataset Details:

- Over 200,000 images (out of a total of 330,000) are labeled.

- Contains 1.5 million object instances across various categories.

- Includes 80 object classes (e.g., person, car, chair) and 91 stuff categories (e.g., sky, street, grass).

- Each image has 5 captions.

- Also provides 250,000 people annotations with 17 different key points (used for pose estimation).

Object Classes:

- The COCO dataset covers a wide range of objects. Some of the pre-trained object classes include:

  - Person, bicycle, car, motorcycle, airplane

  - Bus, train, truck, boat

  - Traffic light, fire hydrant, stop sign

  - Animals (e.g., bird, cat, dog, horse, elephant)

  - Everyday items (e.g., chair, table, TV, laptop, phone)

  - Food (e.g., banana, apple, pizza, cake)

  - And many more!

Benchmarking:

- Researchers and practitioners use COCO as a standard benchmark for evaluating the performance of state-of-the-art computer vision algorithms.

- It’s commonly used to compare real-time object detection models like YOLOv4 and YOLOv7.

In summary, the COCO dataset plays a crucial role in advancing computer vision research by providing diverse and challenging visual data for training and evaluating models. If you’re working on image-related tasks, COCO is a valuable resource to explore! 📸🔍

This sounds like what we need: a pre-labelled dataset that can be used to train a model to detect the objects in our future images. So I headed over to the COCO dataset site: COCO - Common Objects in Context (cocodataset.org).

These files are pretty huge, but here is what I found:

There is also a nifty little tool called FiftyOne, FiftyOne — FiftyOne 0.24.0 documentation (voxel51.com), which is a UI for working with training datasets. Remember that AI is all about the data you give it.

I also stumbled upon this tutorial:

COCO Dataset: A Step-by-Step Guide to Loading and Visualizing (machinelearningspace.com)

The tutorial above lets you run Python in the cloud and use wget to fetch the required files; it also extracts the zip archives and deletes them once extracted.

To experiment with the dataset for object detection, you can use either the “instances_val2017.json” or the “instances_train2017.json” annotation file, as both have the same format. However, the “instances_train2017.json” file is large, so it is easier to work with the smaller “instances_val2017.json” file.
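Before feeding an annotation file into anything, it helps to peek inside it. The sketch below uses only Python's standard library and relies on the top-level `images`, `annotations` and `categories` keys of the COCO instances format. The helper names `load_annotations` and `summarize` are my own, and the file path in the usage example is an assumption about where you extracted the download.

```python
import json
from collections import Counter


def load_annotations(path):
    """Read a COCO instances file (e.g. instances_val2017.json) into a dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize(coco):
    """Count images, annotations and annotations per category name."""
    # Map numeric category ids to readable names such as "person" or "dog".
    names = {c["id"]: c["name"] for c in coco["categories"]}
    per_category = Counter(names[a["category_id"]] for a in coco["annotations"])
    return {
        "images": len(coco["images"]),
        "annotations": len(coco["annotations"]),
        "per_category": dict(per_category),
    }


if __name__ == "__main__":
    # Assumed location; adjust to wherever the zip was extracted.
    coco = load_annotations("annotations/instances_val2017.json")
    print(summarize(coco))
```

Each entry in `annotations` also carries a `bbox` in `[x, y, width, height]` form, which is what an object detection trainer ultimately learns from.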

Ultimately, what we are doing is finding a set of images with pre-loaded labels to train our application, and hopefully it will use its knowledge moving forward. So at this point let's just recap where we are:

- We have used ML.NET to create our first machine learning application

- We have used Google Colab to download our COCO datasets for our machine learning model

- We have used Google Colab to extract our COCO datasets, ready to train our machine learning application

- We have loaded our COCO instances data into the ML.NET application

- Also, don't forget to download the images that it needs to classify too; these need to be in the same location as the JSON file.
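The last point in the recap is easy to get wrong, so a quick check before hitting train can save a failed run. This is a minimal sketch, assuming the images sit in the same folder as the annotation file as described above; `missing_images` is a hypothetical helper name and the path in the usage example is a placeholder.

```python
import json
from pathlib import Path


def missing_images(annotation_file):
    """List image file names referenced by the JSON but absent from its folder."""
    annotation_path = Path(annotation_file)
    folder = annotation_path.parent
    with annotation_path.open(encoding="utf-8") as f:
        coco = json.load(f)
    # Each entry in "images" carries the file name the annotations refer to.
    return [
        img["file_name"]
        for img in coco["images"]
        if not (folder / img["file_name"]).is_file()
    ]


if __name__ == "__main__":
    # Placeholder path; point it at your own extracted annotation file.
    missing = missing_images("instances_val2017.json")
    print(f"{len(missing)} referenced images are missing")
```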

Hit train and the ML.NET engine will train itself on the labelled data. This can take a while, so pat yourself on the back for getting this far, and go and have a beer and falafel.

In summary, we have taken a Python-based concept and, using ML.NET, which seems to be a wrapper for PyTorch, we are loading images and pre-loading the COCO JSON annotation files as training data.

Developed by Shaltiel Shmidman, this extension library facilitates seamless interoperability between .NET and Python for model serialization, simplifying the process of saving and loading PyTorch models in a .NET environment.

At this point pay attention to the output window. Visual Studio will need to download the PyTorch model bin file, which it needs to process all the JSON and classification images we have provided. Look out for the following output:

[Source=AutoMLExperiment-ChildContext, Kind=Trace] [Source=ObjectDetectionTrainer; TrainModel, Kind=Trace] Channel started

[Source=AutoMLExperiment-ChildContext, Kind=Trace] [Source=ObjectDetectionTrainer; Ensuring model file is present., Kind=Trace] Channel started

[Source=AutoMLExperiment-ChildContext, Kind=Trace] [Source=ObjectDetectionTrainer; Ensuring model file is present., Kind=Trace] Channel finished. Elapsed 00:00:00.0046286.

[Source=AutoMLExperiment-ChildContext, Kind=Trace] [Source=ObjectDetectionTrainer; Ensuring model file is present., Kind=Trace] Channel disposed

[Source=AutoMLExperiment-ChildContext, Kind=Trace] [Source=ObjectDetectionTrainer; TrainModel, Kind=Trace] Starting epoch 0

[Source=AutoMLExperiment-ChildContext, Kind=Info] [Source=ObjectDetectionTrainer; TrainModel, Kind=Info] Row: 50, Loss: 3.37559669220563

[Source=AutoMLExperiment-ChildContext, Kind=Trace] [Source=ObjectDetectionTrainer; TrainModel, Kind=Trace] Finished epoch 0
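If you copy that output into a text file, the loss values can be pulled out with a couple of regular expressions. This is a sketch based only on the line shapes shown above ("Starting epoch N" and "Row: N, Loss: X"); other log formats are not handled, and `parse_losses` is a made-up helper name.

```python
import re

# Patterns derived from the trace lines shown above.
EPOCH_START = re.compile(r"Starting epoch (\d+)")
LOSS_LINE = re.compile(r"Row: (\d+), Loss: ([0-9.]+(?:[eE][-+]?\d+)?)")


def parse_losses(lines):
    """Return a list of (epoch, row, loss) tuples found in the log lines."""
    epoch = None  # stays None until a "Starting epoch" line is seen
    results = []
    for line in lines:
        started = EPOCH_START.search(line)
        if started:
            epoch = int(started.group(1))
            continue
        loss = LOSS_LINE.search(line)
        if loss:
            results.append((epoch, int(loss.group(1)), float(loss.group(2))))
    return results


if __name__ == "__main__":
    log = [
        "[Source=ObjectDetectionTrainer; TrainModel, Kind=Trace] Starting epoch 0",
        "[Source=ObjectDetectionTrainer; TrainModel, Kind=Info] Row: 50, Loss: 3.37559669220563",
    ]
    for epoch, row, loss in parse_losses(log):
        print(epoch, row, loss)
```

Watching whether the loss falls from one epoch to the next is a simple way to tell if more epochs are still helping.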

Depending on your CPU and the number of epochs, this will take a while, so I will conclude this article with a link about epochs:

Understanding the Key Concept for Optimizing Model Performance (machinelearninghelp.org)

An epoch is one complete pass of the entire training dataset through the learning algorithm. The number of epochs is configured in the Advanced training options.
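To see why the epoch count has such an effect on training time, here is a small arithmetic sketch in Python. The idea of a batch size is not covered in this article, so treat it and the numbers in the usage example as assumptions for illustration; `training_steps` is a hypothetical helper.

```python
import math


def training_steps(num_images, batch_size, epochs):
    """Return (steps_per_epoch, total_steps) for a training run.

    One epoch is one full pass over all images; each step processes one batch.
    """
    # The last batch of an epoch may be smaller, hence the ceiling.
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


if __name__ == "__main__":
    # Illustrative numbers only.
    per_epoch, total = training_steps(num_images=5000, batch_size=10, epochs=5)
    print(per_epoch, total)
```

Total work grows linearly with the number of epochs, so doubling the epochs roughly doubles the wait.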
